【ReactNative+CloudVision】Created an Angry Face Scoring App

Thank you for your continued support.


Table Of Contents

- I thought ReactNative was the best fit for me, so I tried creating an app.

- Packages used

- Using ReactNavigation

- Using the LinearGradient gradient

- Setting up expo-camera

- Getting camera permissions with expo-permissions

- Taking pictures with takePictureAsync()

- Compressing images using expo-image-manipulator

- Analyzing facial expressions with Google Cloud Vision API

These are my notes from creating an app with ReactNative + Cloud Vision API.

I thought ReactNative was the best fit for me, so I tried creating an app.

Goals for this project

- Use the camera

- Compress and use captured images

- Use image analysis API

- Learn UI

With these goals in mind, I set out to design and build the app in one week.

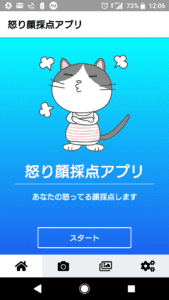

The top page was inspired by other apps.

I used a navigator library and a gradient library, plus a button from React Native Elements.

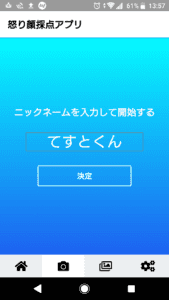

To start, just enter your name.

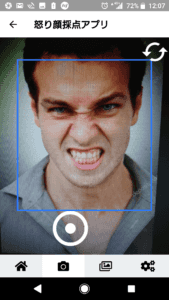

After you enter your name, the app asks for permission to use the camera and then starts the camera.

The size of the camera preview can be changed in many ways, and I tried several of them. However, since every change has to be checked on a real device, I didn't adjust it much.

It's very easy to implement selfie mode.

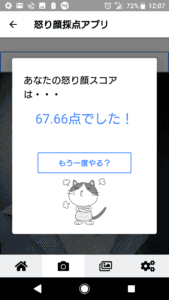

Display the score

I calculate and display the results based on the information sent from the API.
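The scoring formula itself isn't shown in this article. As a minimal sketch, assuming the score is derived from the likelihood fields of one faceAnnotations entry in the Cloud Vision response (angerLikelihood and joyLikelihood take the values UNKNOWN, VERY_UNLIKELY, UNLIKELY, POSSIBLE, LIKELY, VERY_LIKELY), it could look like this. The name scoreAnger, the point values and the "laughing penalty" are hypothetical, not the app's actual formula.

```javascript
// Hypothetical point values for Cloud Vision's likelihood strings.
// (The app's real scoring formula is not shown in the article.)
const LIKELIHOOD_POINTS = {
  UNKNOWN: 0,
  VERY_UNLIKELY: 0,
  UNLIKELY: 25,
  POSSIBLE: 50,
  LIKELY: 75,
  VERY_LIKELY: 100,
};

// Score one faceAnnotation from 0 to 100; returns null when no face was found.
function scoreAnger(faceAnnotation) {
  if (!faceAnnotation) {
    return null;
  }
  const anger = LIKELIHOOD_POINTS[faceAnnotation.angerLikelihood] ?? 0;
  const joy = LIKELIHOOD_POINTS[faceAnnotation.joyLikelihood] ?? 0;
  // Hypothetical rule: laughing while trying to look angry costs up to 40 points.
  const penalty = (joy * 2) / 5;
  return Math.max(0, anger - penalty);
}

// A clearly angry, not-laughing face
console.log(scoreAnger({ angerLikelihood: 'LIKELY', joyLikelihood: 'VERY_UNLIKELY' })); // 75
```

Working from the likelihood strings keeps the score stable and easy to explain to players, since the API reports emotions as buckets rather than as numbers.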

I made it possible to review past photos.

I built this app imagining high school and university students making funny faces as a penalty game, getting them scored, and later looking back at the photos to see how funny they were.

Flow of creating the app

Setting up the navigator → Coding the TOP page → Taking photos with the camera → Compressing, calling the API, and saving → Polishing

The rest of this article covers the code and other details.

Packages used

```json
"@expo/vector-icons": "^10.0.0",
"axios": "^0.19.0",
"expo": "^35.0.0",
"expo-camera": "~7.0.0",
"expo-linear-gradient": "~7.0.0",
"expo-permissions": "~7.0.0",
"firebase": "^7.5.0",
"native-base": "^2.13.8",
"react": "16.8.3",
"react-dom": "16.8.3",
"react-native": "https://github.com/expo/react-native/archive/sdk-35.0.0.tar.gz",
"react-native-elements": "^1.2.7",
"react-native-gesture-handler": "~1.3.0",
"react-native-paper": "^3.2.1",
"react-native-reanimated": "~1.2.0",
"react-native-web": "^0.11.7",
"react-navigation": "^4.0.10",
"react-navigation-material-bottom-tabs": "^2.1.5",
"react-navigation-stack": "^1.10.3",
"react-navigation-tabs": "^2.6.0",
"expo-image-manipulator": "~7.0.0",
"expo-face-detector": "~7.0.0",
"expo-media-library": "~7.0.0"
```

Using ReactNavigation

```json
"react-navigation": "^4.0.10",
"react-navigation-stack": "^1.10.3",
"react-navigation-tabs": "^2.6.0"
```

The article linked above gives a rough idea of how to use these.

I built a tab menu with icons so the app would look like existing apps.

The final color scheme of the TOP page is different from my first version. I changed it after asking other people for their opinions, and learned that it pays to ask for feedback.

```javascript
import { createAppContainer } from 'react-navigation';
import { createStackNavigator } from 'react-navigation-stack';
import { createBottomTabNavigator } from 'react-navigation-tabs';
```

With react-navigation 4, these three functions are imported from separate packages; in earlier versions they were all imported from react-navigation itself.

```javascript
// FrontScreen, HomeScreen and ContentScreen are the app's own screen components
const ContentStack = createStackNavigator(
  {
    Front: { screen: FrontScreen }, // Screen for entering a nickname
    Home: { screen: HomeScreen }, // Main content. Very large; I wanted to split it into modules but ran out of time
    Content: { screen: ContentScreen } // Not actually needed
  },
  {
    defaultNavigationOptions: {
      headerTitle: 'Angry Face Scoring App',
      headerTintColor: 'black'
    }
  }
);
```

Create a stack navigator like this for each tab, then combine them in a bottom tab navigator wrapped in createAppContainer:

```javascript
export default createAppContainer(
  createBottomTabNavigator(
    {
      ...
    },
    {
      initialRouteName: 'Top', // The tab shown first
      tabBarOptions: {
        showLabel: false,
        inactiveBackgroundColor: '#eceff1',
        activeBackgroundColor: '#fff'
      } // Menu included below here
    }
  )
);
```

Using the LinearGradient gradient

```json
"expo-linear-gradient": "~7.0.0"
```

You can create a blue background like this.

```javascript
import { LinearGradient } from 'expo-linear-gradient';

// Inside the render method
<LinearGradient
  colors={['#05fbff', '#1d62f0']}
  style={{
    flex: 1,
    width: '100%',
    justifyContent: 'center',
    alignItems: 'center'
  }}
>
  {/* Content goes here */}
</LinearGradient>
```

Setting up expo-camera

```json
"expo-camera": "~7.0.0"
```

I was surprised at how simple it was to take photos.

```javascript
import { Camera } from 'expo-camera';

// Inside the render method.
// this.state.type is Camera.Constants.Type.back, or Camera.Constants.Type.front for selfie mode.
<Camera
  style={{ flex: 1 }}
  type={this.state.type}
  ref={ref => {
    this.camera = ref; // keep a reference so takePictureAsync() can be called later
  }}
>
  {/* You can also put buttons and other views in here, which makes it very versatile. */}
</Camera>
```

Getting camera permissions with expo-permissions

```json
"expo-permissions": "~7.0.0"
```

Request camera permission when the screen that uses the camera first loads.

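```javascript
import React from 'react';
import { Text, View } from 'react-native';
import * as Permissions from 'expo-permissions';

class HomeScreen extends React.Component {
  state = {
    hasCameraPermission: null // null until the user answers the dialog
  };

  async componentDidMount() {
    // Ask for camera access as soon as the screen mounts
    const { status } = await Permissions.askAsync(Permissions.CAMERA);
    this.setState({ hasCameraPermission: status === 'granted' });
  }

  render() {
    const { hasCameraPermission } = this.state;
    if (hasCameraPermission === null) return <View />; // still waiting for the answer
    if (hasCameraPermission === false) return <Text>No access to camera</Text>;
    return <View style={{ flex: 1 }}>{/* the <Camera> shown above */}</View>;
  }
}
```

The render method should return different content depending on whether camera permission was granted.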

Taking pictures with takePictureAsync()

Taking a photo is very easy; the image is saved to the app's cache directory.

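```javascript
snapShot = async () => {
  // this.camera is the ref set on <Camera>; this single line takes the photo
  const result = await this.camera.takePictureAsync();
};
```

The returned object contains the photo's information, such as its uri, width and height. Try logging it with console.log.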

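Compressing images using expo-image-manipulator

```json
"expo-image-manipulator": "~7.0.0"
```

I used this package to compress images to a size the Google Cloud Vision API accepts.

```javascript
import * as ImageManipulator from 'expo-image-manipulator';

// Inside an async function, after takePictureAsync() has returned `result`.
// `actions` is an array of transformations, e.g. [{ resize: { width: 640 } }];
// the values used in the app are not shown here.
const photo = await ImageManipulator.manipulateAsync(
  result.uri,
  actions,
  {
    compress: 0.4, // JPEG quality from 0 to 1 (lower means a smaller file)
    base64: true // also return the image as a base64 string
  }
);
```

The returned photo object holds the compressed image as a base64 string in photo.base64.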
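The actions passed to manipulateAsync() aren't shown above. A minimal sketch, assuming the goal is to shrink the longer side of the photo to an assumed limit of 640 px before JPEG compression: the hypothetical helper buildResizeActions builds a resize action, and the hypothetical helper base64ByteLength estimates how many bytes a base64 string decodes to, which is a quick way to check the payload size before sending it.

```javascript
// Hypothetical helper: build the `actions` array for ImageManipulator.manipulateAsync()
// so that the longer side of the photo is at most maxSide pixels (640 is an assumed limit).
function buildResizeActions(width, height, maxSide = 640) {
  if (Math.max(width, height) <= maxSide) {
    return []; // already small enough; only the `compress` option will shrink it
  }
  // Passing a single dimension lets manipulateAsync keep the aspect ratio.
  return width >= height
    ? [{ resize: { width: maxSide } }]
    : [{ resize: { height: maxSide } }];
}

// Decoded byte length of an unwrapped base64 string:
// every 4 characters encode 3 bytes, and trailing '=' characters are padding.
function base64ByteLength(b64) {
  const padding = b64.endsWith('==') ? 2 : b64.endsWith('=') ? 1 : 0;
  return (b64.length / 4) * 3 - padding;
}

// A 3024x4032 portrait photo gets its height limited to 640.
console.log(JSON.stringify(buildResizeActions(3024, 4032))); // [{"resize":{"height":640}}]
console.log(base64ByteLength('QUJD')); // 3
```

In the app this would be called as buildResizeActions(result.width, result.height), since the object returned by takePictureAsync() includes the photo's width and height.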

Analyzing facial expressions with Google Cloud Vision API

I sent the compressed image to the API, scored the analysis results, and saved them to a MySQL database through my own API running on a local server.

For this you need your own Cloud Vision API key and the URI of your server.
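A minimal sketch of the Cloud Vision request, assuming the base64 image is sent directly to the REST endpoint images:annotate with the API key as a key query parameter. The helper names buildVisionRequest and firstFace are hypothetical, and the step that saves results to the MySQL server is left out because that server's API isn't described.

```javascript
// Cloud Vision REST endpoint for image annotation.
const VISION_ENDPOINT = 'https://vision.googleapis.com/v1/images:annotate';

// Build the URL and JSON body for a FACE_DETECTION request.
// base64Image is photo.base64 from manipulateAsync(); apiKey is your own key.
function buildVisionRequest(base64Image, apiKey) {
  return {
    url: `${VISION_ENDPOINT}?key=${encodeURIComponent(apiKey)}`,
    body: {
      requests: [
        {
          image: { content: base64Image },
          features: [{ type: 'FACE_DETECTION', maxResults: 1 }],
        },
      ],
    },
  };
}

// Return the first detected face (with angerLikelihood, joyLikelihood, ...) or null.
function firstFace(responseJson) {
  return responseJson?.responses?.[0]?.faceAnnotations?.[0] ?? null;
}

// In the app (not run here), with axios:
//   const { url, body } = buildVisionRequest(photo.base64, API_KEY);
//   const res = await axios.post(url, body);
//   const face = firstFace(res.data);
```

Keeping the request construction and response parsing as plain functions makes them easy to check without a device or network; only the axios.post call needs the real API.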
